The history of computing is longer than the history of computing hardware and modern computing technology and includes the history of methods intended for pen and paper or for chalk and slate, with or without the aid of tables. The timeline of computing presents a summary list of major developments in computing by date. Computing is intimately tied to the representation of numbers. But long before abstractions like the number arose, there were mathematical concepts to serve the purposes of civilization. These concepts are implicit in concrete practices such as : one-to-one correspondence, a rule to count how many items, say on a tally stick, eventually abstracted into numbers; comparison to a standard, a method for assuming reproducibility in a measurement, for example, the number of coins; the 3-4-5 right triangle was a device for assuring a right angle, using ropes with 12 evenly spaced knots, for example.

-Numbers-

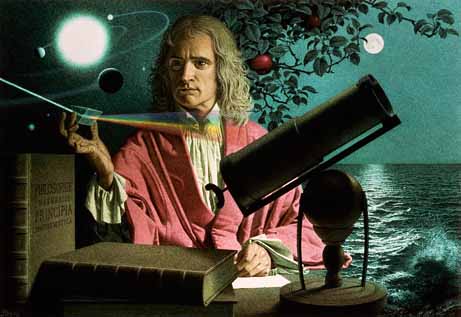

Eventually, the concept of numbers became concrete and familiar enough for counting to arise, at times with sing-song mnemonics to teach sequences to others. All known languages have words for at least "one" and "two" (although this is disputed: see Piraha language), and even some animals like the blackbird can distinguish a surprising number of items.[1] Advances in the numeral system and mathematical notation eventually led to the discovery of mathematical operations such as addition, subtraction, multiplication, division, squaring, square root, and so forth. Eventually the operations were formalized, and concepts about the operations became understood well enough to be stated formally, and even proven. See, for example, Euclid's algorithm for finding the greatest common divisor of two numbers. By the High Middle Ages, the positional Hindu-Arabic numeral system had reached Europe, which allowed for systematic computation of numbers. During this period, the representation of a calculation on paper actually allowed calculation of mathematical expressions, and the tabulation of mathematical functions such as the square root and the common logarithm (for use in multiplication and division) and the trigonometric functions. By the time of Isaac Newton's research, paper or vellum was an important computing resource, and even in our present time, researchers like Enrico Fermi would cover random scraps of paper with calculation, to satisfy their curiosity about an equation.[citation needed] Even into the period of programmable calculators, Richard Feynman would unhesitatingly compute any steps which overflowed the memory of the calculators, by hand, just to learn the answer.

Eventually, the concept of numbers became concrete and familiar enough for counting to arise, at times with sing-song mnemonics to teach sequences to others. All known languages have words for at least "one" and "two" (although this is disputed: see Piraha language), and even some animals like the blackbird can distinguish a surprising number of items.[1] Advances in the numeral system and mathematical notation eventually led to the discovery of mathematical operations such as addition, subtraction, multiplication, division, squaring, square root, and so forth. Eventually the operations were formalized, and concepts about the operations became understood well enough to be stated formally, and even proven. See, for example, Euclid's algorithm for finding the greatest common divisor of two numbers. By the High Middle Ages, the positional Hindu-Arabic numeral system had reached Europe, which allowed for systematic computation of numbers. During this period, the representation of a calculation on paper actually allowed calculation of mathematical expressions, and the tabulation of mathematical functions such as the square root and the common logarithm (for use in multiplication and division) and the trigonometric functions. By the time of Isaac Newton's research, paper or vellum was an important computing resource, and even in our present time, researchers like Enrico Fermi would cover random scraps of paper with calculation, to satisfy their curiosity about an equation.[citation needed] Even into the period of programmable calculators, Richard Feynman would unhesitatingly compute any steps which overflowed the memory of the calculators, by hand, just to learn the answer.-Navigation and astronomy-

Starting with known special cases, the calculation of logarithms and trigonometric functions can be performed by looking up numbers in a mathematical table, and interpolating between known cases. For small enough differences, this linear operation was accurate enough for use in navigation and astronomy in the Age of Exploration. The uses of interpolation have thrived in the past 500 years: by the twentieth century Leslie Comrie and W.J. Eckert systematized the use of interpolation in tables of numbers for punch card calculation. In our time, even a student can simulate the motion of the planets, an N-body differential equation, using the concepts of numerical approximation, a feat which even Isaac Newton could admire, given his struggles with the motion of the Moon.

No comments:

Post a Comment